Dataset Continuity Assembly File for 1912098369, 120828251, 8474674975, 1148577700, 2812046247, 36107257

The Dataset Continuity Assembly File for the identifiers 1912098369, 120828251, 8474674975, 1148577700, 2812046247, and 36107257 is meant to maintain data integrity. It acts as a bridge between versions of a dataset, letting stakeholders trace how the data has changed over time. This continuity reduces the risks associated with data decay and makes analysis more dependable. Understanding how it works sheds light on data management practices and decision-making processes across sectors.

Importance of Dataset Continuity

The significance of dataset continuity lies in its capacity to ensure the integrity and reliability of data over time.

By maintaining robust data lineage and implementing effective version control, organizations can trace the evolution of datasets, mitigating risks associated with data decay.

This systematic approach builds trust: stakeholders can see where a dataset came from and how it has changed, so they can rely on it for decisions and still experiment with new uses of the data.
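To make lineage and version control concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of how any particular assembly file is built: the `DatasetVersion` record, the `record_version` and `lineage_is_intact` helpers, and the choice to treat the identifiers from the title as opaque strings are all hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DatasetVersion:
    """One entry in a dataset's history (hypothetical structure)."""
    dataset_id: str
    version: int
    content_hash: str
    parent_hash: Optional[str]  # hash of the previous version, None for the first


def record_version(history: List[DatasetVersion], dataset_id: str,
                   content: bytes) -> List[DatasetVersion]:
    """Return a new history with one more version appended."""
    parent = history[-1] if history else None
    entry = DatasetVersion(
        dataset_id=dataset_id,
        version=parent.version + 1 if parent else 1,
        content_hash=hashlib.sha256(content).hexdigest(),
        parent_hash=parent.content_hash if parent else None,
    )
    return history + [entry]


def lineage_is_intact(history: List[DatasetVersion]) -> bool:
    """Check that every version points at the hash of the one before it."""
    for prev, cur in zip(history, history[1:]):
        if cur.parent_hash != prev.content_hash:
            return False
        if cur.version != prev.version + 1:
            return False
    # The first version, if any, must have no parent.
    return not history or history[0].parent_hash is None


# Usage: two successive snapshots of the same (made-up) dataset content.
history = record_version([], "1912098369", b"a,b\n1,2\n")
history = record_version(history, "1912098369", b"a,b\n1,3\n")
```

Because each entry stores the hash of its predecessor, a missing or reordered version shows up as a broken chain rather than going unnoticed.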

Interconnectivity of Unique Identifiers

Unique identifiers are what connect datasets across different systems and applications.

Because an identifier gives every system the same reference point for a record, data held in separate places can be matched, integrated, and retrieved without ambiguity.

This lets organizations combine disparate data sources, keeps data management efficient, and gives users easier access to related information.
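The idea of an identifier as a shared reference point can be sketched as a simple merge. Everything here is hypothetical: the `link_by_identifier` helper, the `catalog` and `storage` sources, and their field values are made up for illustration, and only the identifiers are taken from the title.

```python
def link_by_identifier(*sources, key="id"):
    """Merge records from several sources that share the same identifier."""
    merged = {}
    for source in sources:
        for record in source:
            # Normalise the identifier so 1912098369 and "1912098369" match.
            identifier = str(record[key])
            fields = {k: v for k, v in record.items() if k != key}
            merged.setdefault(identifier, {}).update(fields)
    return merged


# Two hypothetical systems describing overlapping datasets.
catalog = [{"id": 1912098369, "title": "example dataset A"}]
storage = [
    {"id": "1912098369", "path": "/data/a.csv"},
    {"id": "120828251", "path": "/data/b.csv"},
]

linked = link_by_identifier(catalog, storage)
```

Normalising the identifier to a string before matching is a design choice in this sketch; without it, the same identifier stored as a number in one system and as text in another would be treated as two different records.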

Methodologies for Preserving Data Integrity

Maintaining data integrity is paramount for ensuring reliable and accurate information across interconnected systems.

Methodologies such as rigorous data validation techniques and robust error detection mechanisms are essential. Employing checksums, audits, and automated validation scripts can significantly enhance accuracy, while real-time monitoring tools enable swift error identification.

Collectively, these strategies foster a resilient data environment, promoting transparency and trust in data-driven decision-making.
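As a rough illustration of checksums and automated validation scripts, the sketch below uses Python's standard `hashlib` module. The helper names, the required fields, and the rule that an identifier must be numeric are assumptions made for the example, not requirements stated by the file itself.

```python
import hashlib


def sha256_checksum(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_checksum(data: bytes, expected: str) -> bool:
    """True if the data still hashes to the checksum recorded earlier."""
    return sha256_checksum(data) == expected


def validate_rows(rows, required=("id", "value")):
    """Return (row_index, problem) tuples; an empty list means all rows passed."""
    problems = []
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Assumed rule: identifiers are strings of digits.
        if row.get("id") and not str(row["id"]).isdigit():
            problems.append((i, "id is not numeric"))
    return problems


# Usage: record a checksum, then detect a later change to the content.
original = b"id,value\n36107257,1\n"
recorded = sha256_checksum(original)
tampered = b"id,value\n36107257,2\n"

rows = [
    {"id": "36107257", "value": 1},
    {"id": "abc", "value": 2},
    {"id": "", "value": 3},
]
issues = validate_rows(rows)
```

The checksum catches any change to the bytes but cannot say what changed; the row-level validation is the complementary check that reports which record is at fault and why.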

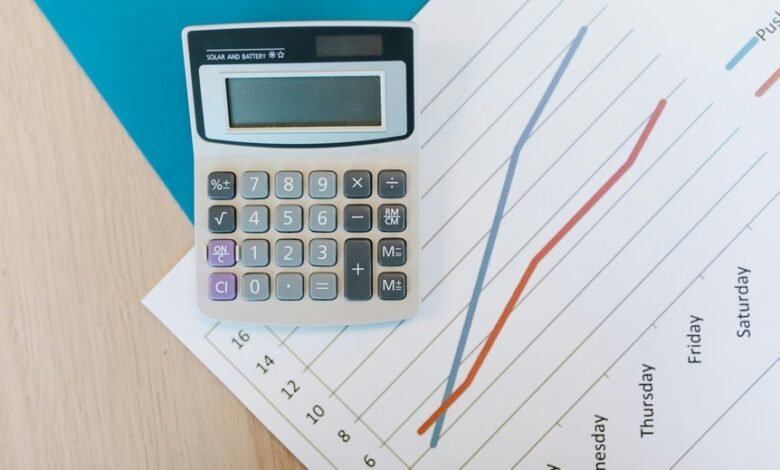

Enhancing Analytical Capabilities Through Continuity

While effective data continuity is often viewed as a foundational element in data management, it also serves as a catalyst for enhancing analytical capabilities across organizations.

By ensuring seamless data integration, organizations can leverage advanced data visualization techniques and harness predictive analytics, ultimately transforming raw data into actionable insights.

With consistent, well-integrated data behind them, decision-makers can respond to changing business conditions quickly and on the basis of evidence.

Conclusion

In summary, the Dataset Continuity Assembly File is more than a convenience: it is the mechanism that protects data integrity against decay. By tying datasets together through unique identifiers, it keeps their history traceable and their contents verifiable. That in turn safeguards analytical capabilities and gives decision-makers data they can rely on as market conditions change.